Sim2Real Gap: Why Machine Learning Hasn’t Solved Robotics Yet

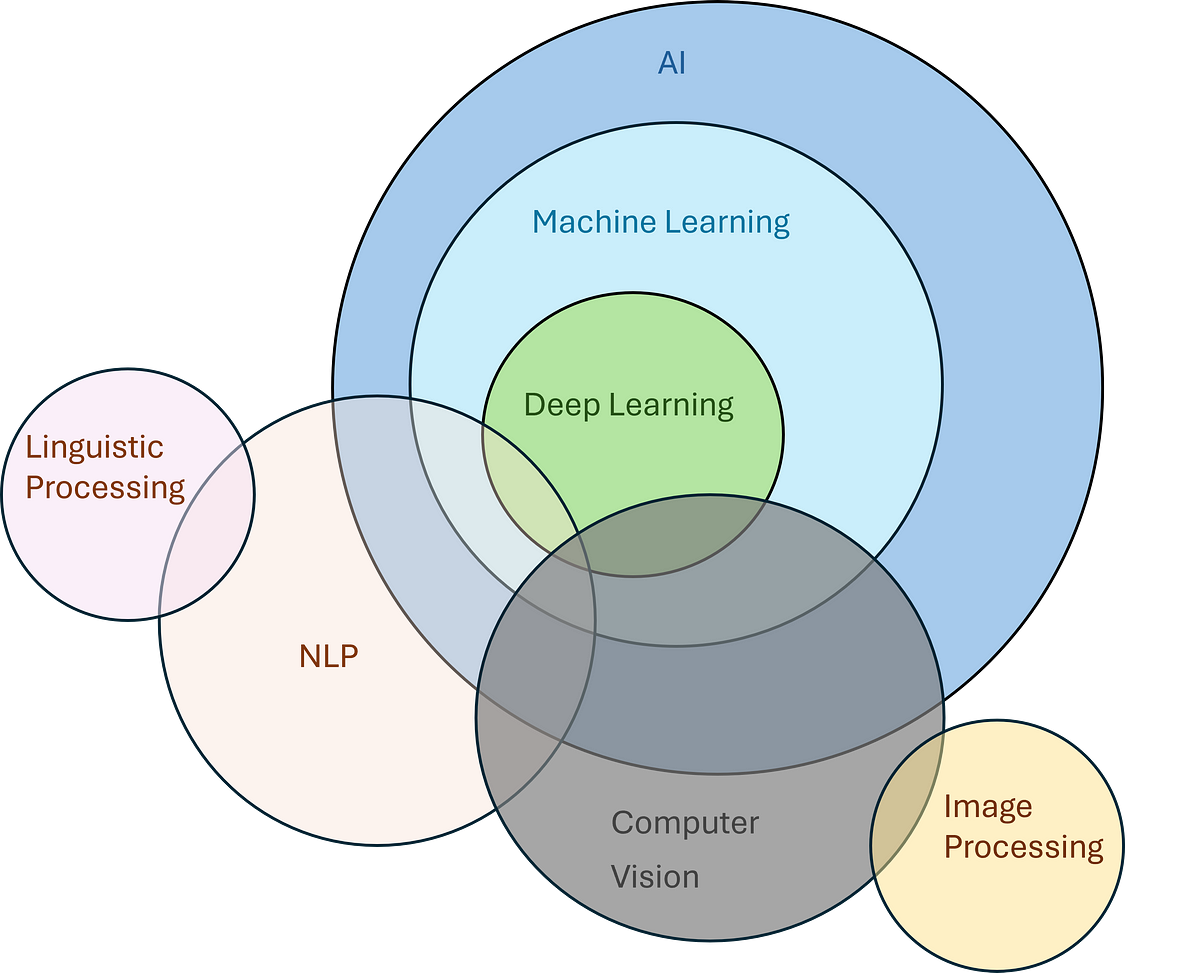

The successes of AI in the last decade were largely made possible by the simultaneous increase in computing power and data availability. This enabled the development of algorithms that could fully leverage these advancements. As a result, deep learning has transitioned from a niche subfield of machine learning to a transformative force across virtually all scientific and industrial domains.

The most successful areas of application for deep learning so far have been Computer Vision (CV), where it all started, and more recently, Natural Language Processing (NLP). While research in Robotics is more active than ever, the translation from research to real-world applications is still a promise, not a reality. But why?

One key reason is that both CV and NLP benefit from vast, publicly available data sources on the Internet (mainly text and images), and making this data usable by deep learning techniques, usually through annotation, is relatively cheap today. The situation is very different for Robotics. There is no Internet-scale source of robot data: there are relatively few robots in the world, it is hard to define what constitutes useful “robot data”, and industrial or proprietary data often cannot be shared. Annotating robot data is also extremely challenging, because it is multi-modal and tied to the robot’s interactions with its environment.

One Potential Solution: Data Collection

One approach to solving this issue is to invest heavily in collecting high-quality, curated, and diverse data across a variety of robotic systems, and to repeat this process for each potential robotic task. Historically, this was the starting point for modern deep learning in computer vision, with the creation of ImageNet and its associated challenge: it took Fei-Fei Li (https://profiles.stanford.edu/fei-fei-li) and her team 2 years to assemble, select, and label a set of 11 million images covering more than 10,000 object types, for the single task of image classification. Similarly, a consortium of 21 institutions led by Google DeepMind is undertaking a massive effort with Open X-Embodiment, a dataset sourced from 22 different robots and demonstrating 527 skills across 160,266 tasks. This effort is unprecedented in scale, largely because of its immense cost in both human-hours and robotic systems. As a recent research development, its industrial impact will likely take years to materialize.

A Second Potential Solution: Simulation

Another approach is to bypass the limitations of hardware and physical environments by using simulation. Simulation, the virtual recreation of the physical world using only computational power, represents the holy grail for every data-driven system, especially in robotics.

Promises of Simulation for Robotics

The idealized goals of simulation for robotics are two-fold:

- Full Control of the Setup

The first goal is having complete control over the setup, both in terms of scene appearance and dynamics: what the scene looks like and how it behaves when parts move or interact with forces.

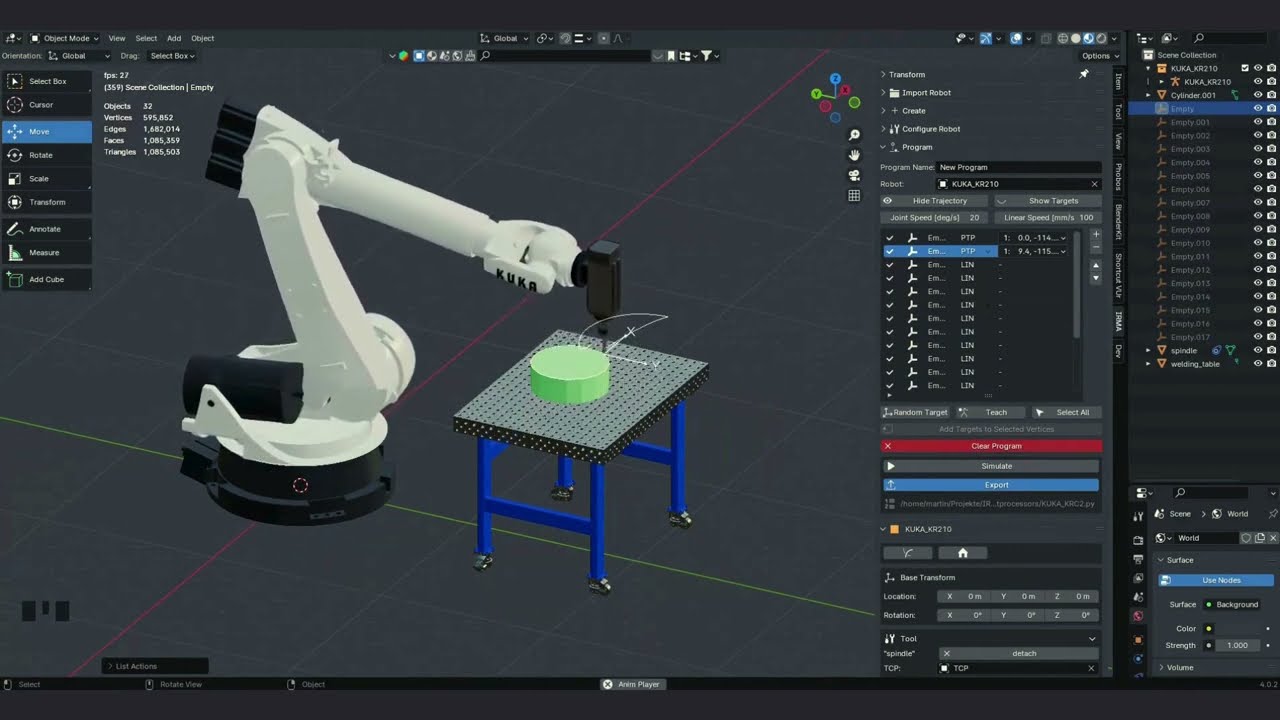

These two aspects are distinct problems: scene appearance is addressed by 3D rendering software (such as Blender in the open-source domain), while the dynamics are tackled by physics simulation software. These types of software are gradually merging, but each often specializes in one domain (for example, Blender has some physics capabilities but is not designed for complex friction simulations).

With full flexibility in both, we are no longer constrained by the limited set of objects available in real environments. The robot itself can also be modified at will, allowing for the development of robot-agnostic control strategies.

- Experiment Time/Compute Equivalence

The second objective of simulation is to free experiments and training runs from the constraints of robotic hardware (e.g., safety, maintenance, human supervision). With simulation, all that is required to run an experiment is to start a software program. More computing power then lets us run experiments faster than real time, run multiple simulations in parallel, or both.

From a machine learning perspective, particularly reinforcement learning, this enables the collection of data points at a rate that supports current, data-inefficient strategies (where thousands or even millions of trials may be necessary depending on task complexity).
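To make the scaling argument concrete, here is a minimal sketch of stepping many copies of a toy simulation in lockstep with NumPy. The 1-D point-mass "environment", the `step_batch` and `collect` functions, and all parameter values are hypothetical stand-ins for a real physics engine; the point is only that one vectorized call advances every environment, so the number of collected transitions grows with the batch size rather than with robot time.

```python
import numpy as np


def step_batch(pos, vel, force, dt=0.01, mass=1.0):
    """Advance N independent 1-D point-mass simulations by one time step.

    pos, vel and force are arrays of shape (N,), one entry per environment.
    Uses semi-implicit Euler: update velocity first, then position.
    """
    vel = vel + (force / mass) * dt
    pos = pos + vel * dt
    return pos, vel


def collect(num_envs=1024, num_steps=500, seed=0):
    """Run num_envs toy simulations in lockstep and count collected transitions."""
    rng = np.random.default_rng(seed)
    pos = np.zeros(num_envs)
    vel = np.zeros(num_envs)
    transitions = 0
    for _ in range(num_steps):
        # Random exploratory forces, standing in for an RL agent's actions.
        force = rng.uniform(-1.0, 1.0, size=num_envs)
        pos, vel = step_batch(pos, vel, force)
        transitions += num_envs  # one (state, action, next state) per environment
    return pos, vel, transitions


pos, vel, n = collect()
print(n)  # 512000
```

With 1,024 environments and 500 steps, a single loop yields 512,000 transitions; a real setup would swap the toy dynamics for a GPU-parallel or multi-process simulator, but the accounting is the same.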

The Dream

If these two goals were realized, there would be no limit to the variety of robot and scene combinations that could be used for data generation. Scaling up experiments would simply be a matter of adding more compute power. Once a task was defined, data could be generated, a model trained, and the result transferred to the physical robotic setup. Since the scenes would be perfectly rendered and physically accurate, the robot should perform exactly as it did in training, ideally “out of the box.”

The Sim2Real Gap

Unfortunately, neither scene rendering nor physical simulation is perfect, meaning they do not fully replicate the reality of the robotic system and its environment. As a result, an algorithm trained solely on simulated data cannot be guaranteed to perform similarly in a real-world scenario.

In practice, the outcomes are often catastrophic, as current machine learning algorithms struggle with domain shift (the discrepancy between training and testing data).

Discrepancies can arise at every stage of the simulation, but the primary issues are:

- Limited Fidelity of 3D Rendering: Achieving fully physics-accurate light simulation is prohibitively expensive. As a result, decades of work have gone into algorithmic approximations that deliver photorealism at a manageable cost. Trade-offs between quality and rendering speed remain, especially when the simulation must run faster than real time, and they lead to inaccuracies and unnatural visual artifacts.

- Limited Accuracy of Physical Simulation in Contact-Rich Environments: Robotics often involves interaction with the environment, making contact modeling a critical challenge. However, simulating realistic contact phenomena (such as material friction and deformation) remains an open problem.

- Noise Replication: The algorithms consume sensor readings, so a simulation must reproduce what the robot’s sensors would actually output, including their noise. Real sensors are inherently noisy, and reproducing their noise profile is difficult. Modeling the full physics and electronics of a sensor is generally infeasible (partly because the necessary details are often proprietary), so the noise is instead emulated to match the measured noise profile of the target sensor.
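As an illustration of emulating a noise profile rather than modeling sensor physics, the sketch below corrupts an ideal simulated depth map. The noise model is an assumption: noise that grows with the square of the distance is a common simplification for triangulation-based depth sensors (stereo or structured light), while the quantization step, dropout rate, and the `add_depth_noise` function itself are hypothetical. A real pipeline would calibrate every parameter against the target sensor.

```python
import numpy as np


def add_depth_noise(depth, k=0.0025, dropout_prob=0.02, resolution=0.001, rng=None):
    """Corrupt an ideal simulated depth map so it looks more like a real sensor's.

    Assumed (hypothetical) noise profile, to be replaced by the target sensor's:
      - axial Gaussian noise with standard deviation sigma(z) = k * z**2,
      - depth quantization to steps of `resolution` metres,
      - random pixel dropout with probability `dropout_prob`.
    Depths are in metres; 0 means "no measurement".
    """
    rng = np.random.default_rng() if rng is None else rng
    depth = np.asarray(depth, dtype=float)
    valid = depth > 0
    noisy = depth + rng.normal(size=depth.shape) * (k * depth**2)
    noisy = np.round(noisy / resolution) * resolution  # quantize
    noisy = np.clip(noisy, 0.0, None)  # a sensor never reports negative depth
    dropped = rng.random(depth.shape) < dropout_prob
    noisy[~valid | dropped] = 0.0  # missing returns stay missing; add random holes
    return noisy


ideal = np.full((100, 100), 1.5)  # flat wall 1.5 m away, as rendered by a simulator
measured = add_depth_noise(ideal, rng=np.random.default_rng(0))
```

The ideal render stays untouched; only the sensor model is swapped, which keeps the noise profile easy to recalibrate when the target sensor changes.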

Closing the Gap

While perfect simulations remain out of reach, several strategies can help mitigate these issues. These strategies generally fall into the following categories:

- Learning on Simulation, Fine-Tuning on Real-Life Data: After training an algorithm on a large simulated dataset, it can be fine-tuned on a smaller set of real-world data. However, this approach can lead to catastrophic forgetting (the loss of general features from the larger dataset) and is difficult to execute correctly.

- Increase Domain Variability: Overfitting to an inaccurate simulation is a common problem. To counteract this, algorithms can be trained on data whose simulation parameters (appearance, lighting, physical properties) vary widely while the task stays the same, an approach often called domain randomization. This forces the algorithm to rely on the features that matter for the task rather than on the quirks of one particular simulation. The real world then becomes just another variation, and the algorithm can still extract the key features.

- Tuning the Simulation with Real-Life Data: Instead of introducing variability, another approach is to tune the simulation output to closely match real-world data. This can be applied to all aspects of the simulation: 3D object synthesis, rendering (visual), and dynamics.

- Learning High-Level Policies: Learning high-level policies (e.g., “go there”) instead of low-level controllers (e.g., “move actuator joint X by Y degrees”) can reduce the impact of inaccuracies in hardware simulations.
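Increasing domain variability is often implemented as domain randomization: before each training episode, the simulator is configured with freshly sampled parameters while the task itself stays fixed. The sketch below only shows the sampling side; the parameter names, ranges, and texture count are hypothetical, and how the values are applied depends entirely on the simulator in use.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    """One draw of simulation parameters the learned model should be robust to."""
    friction: float
    object_mass: float
    light_intensity: float
    camera_jitter_deg: float
    texture_id: int


# Hypothetical ranges; in practice they should cover the plausible real-world values.
RANGES = {
    "friction": (0.3, 1.2),
    "object_mass": (0.2, 2.0),         # kg
    "light_intensity": (0.5, 1.5),     # relative to nominal
    "camera_jitter_deg": (-3.0, 3.0),  # camera mounting error
}
NUM_TEXTURES = 50


def sample_params(rng: random.Random) -> SimParams:
    """Draw a fresh, independent set of simulation parameters."""
    return SimParams(
        friction=rng.uniform(*RANGES["friction"]),
        object_mass=rng.uniform(*RANGES["object_mass"]),
        light_intensity=rng.uniform(*RANGES["light_intensity"]),
        camera_jitter_deg=rng.uniform(*RANGES["camera_jitter_deg"]),
        texture_id=rng.randrange(NUM_TEXTURES),
    )


def randomized_episodes(num_episodes: int, seed: int = 0):
    """Yield one parameter set per training episode; the task itself never changes."""
    rng = random.Random(seed)
    for _ in range(num_episodes):
        yield sample_params(rng)
```

A training loop would then look like `for params in randomized_episodes(10_000): run_episode(params)`, where `run_episode` is a hypothetical function that configures the simulator and runs the unchanged task. Seeding the generator keeps the randomization reproducible across runs.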

At Inbolt, our main product focuses on adapting robot trajectories to match a part’s 3D pose. This means we need to track the part accurately, robustly, and in real-time.

Tracking works by comparing sensor data (an approximation of reality) to a simulated model of the part. This is where the sim2real gap comes in: if the difference is too large, tracking performance suffers, leading to issues like offsets, drift, or even total loss of tracking.
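The comparison between sensor data and the model can be pictured as a residual: how far the observed points lie from the part’s model placed at the estimated pose. The sketch below is not Inbolt’s tracker; it is a generic, brute-force illustration assuming both the sensor data and the model are available as 3D point clouds, and the function names, toy part, and noise level are hypothetical. A pose offset shows up directly as a larger residual.

```python
import numpy as np


def transform(points, rotation, translation):
    """Apply a rigid transform (3x3 rotation, 3-vector translation) to (N, 3) points."""
    return points @ rotation.T + translation


def mean_residual(observed, model_points, rotation, translation):
    """Mean distance from each observed point to the nearest point of the posed model.

    Brute-force nearest neighbours: fine for a sketch, far too slow for real time.
    """
    posed = transform(model_points, rotation, translation)
    # (N_obs, N_model) matrix of pairwise Euclidean distances
    dists = np.linalg.norm(observed[:, None, :] - posed[None, :, :], axis=-1)
    return float(dists.min(axis=1).mean())


rng = np.random.default_rng(0)
model = rng.uniform(0.0, 0.1, size=(300, 3))  # toy "part": points in a 10 cm cube
true_R, true_t = np.eye(3), np.array([0.5, 0.0, 0.2])
# Simulated sensor view: the part at its true pose plus 1 mm Gaussian noise.
observed = transform(model, true_R, true_t) + rng.normal(scale=0.001, size=model.shape)

good = mean_residual(observed, model, true_R, true_t)
offset = mean_residual(observed, model, true_R, true_t + np.array([0.02, 0.0, 0.0]))
```

At the true pose the residual is on the order of the sensor noise, while a 2 cm offset raises it several-fold. The same mechanism cuts both ways: if the model itself differs systematically from what the sensor sees, the residual grows even at the correct pose, which is exactly the sim2real gap described above.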

To maintain tracking quality, we rely heavily on the ‘Tuning the Simulation with Real-Life Data’ approach. This ensures that our raw models, whether from a CAD file or a 3D scan, are automatically processed to generate data that aligns closely with sensor data, despite potential inaccuracies on both sides (sensor noise or 3D model limitations).
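In its simplest form, tuning a simulation with real-life data means fitting a simulator parameter to measurements. As a generic sketch (an assumption, not a description of Inbolt’s pipeline): if simulated depth noise is modeled as Gaussian with standard deviation k·z², the coefficient k can be estimated by maximum likelihood from residuals between real sensor readings and a known reference such as a calibration target. The function name and the synthetic data are hypothetical.

```python
import numpy as np


def fit_noise_coefficient(depths, residuals):
    """Maximum-likelihood estimate of k in the assumed model r ~ N(0, (k * z**2)**2).

    depths: reference depths z_i in metres (e.g. from a calibration target).
    residuals: measured depth minus reference depth, same shape as depths.
    Setting the derivative of the log-likelihood to zero gives k**2 = mean(r_i**2 / z_i**4).
    """
    depths = np.asarray(depths, dtype=float)
    residuals = np.asarray(residuals, dtype=float)
    return float(np.sqrt(np.mean(residuals**2 / depths**4)))


# Synthetic stand-in for real measurements, generated with k = 0.002 so the fit can be checked.
rng = np.random.default_rng(42)
z = rng.uniform(0.5, 2.0, size=20_000)
r = rng.normal(size=z.shape) * 0.002 * z**2
k_hat = fit_noise_coefficient(z, r)
```

The fitted value would then replace a hand-picked coefficient in the simulator, so that generated data carries the same noise level as the real sensor. The same fit-then-simulate loop applies to other parameters (material appearance, friction), only with a different model and estimator.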
