Exploring the potential of LLMs in robotics: an interview with Louis Dumas

Robotics has always been at the forefront of technological advancement, but translating human ideas into robotic actions remains one of its greatest challenges. In this interview, Louis Dumas, co-founder and CTO of Inbolt, shares his insights into the transformative potential and current limitations of Large Language Models (LLMs) in the robotics field.

What are LLMs?

Louis Dumas

Large Language Models, or LLMs, are advanced AI systems trained on vast amounts of text data to generate and understand human-like language. Examples include OpenAI’s GPT models (like ChatGPT), Google’s PaLM, and Meta’s LLaMA. These models simplify communication by enabling natural language interactions between humans and machines. While LLMs themselves are software-based and don’t have a physical form, they can be integrated into robots, virtual assistants, or embedded in devices like smart speakers, providing tangible ways for users to interact with them.

Why are LLMs significant in robotics?

In robotics, LLMs transform how we interact with robots. They make robots more accessible to non-technical users by accepting commands in natural language, that is, everyday speech or text that doesn’t require programming skills or technical jargon. For example, a user can instruct a robot by saying, “Pick up the red box and place it on the shelf,” instead of writing complex code. LLMs also assist in programming by generating code snippets, automating repetitive coding tasks, and even debugging errors. This helps engineers design and implement robotic workflows more efficiently and speeds up development. However, their role is currently limited to serving as an interface for processing language, rather than directly controlling robotic actions or handling physical tasks.
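To make the "language interface" role concrete, here is a minimal Python sketch of how a model's reply to the command above might be turned into structured steps that a separate robot controller could consume. Everything in it is an assumption made for illustration: the JSON shape, the `ALLOWED_ACTIONS` vocabulary, and the `RobotStep` and `parse_plan` names are hypothetical, and the reply string is a hand-written stand-in rather than real model output.

```python
import json
from dataclasses import dataclass

# Hypothetical action vocabulary; a real robot stack would define its own.
ALLOWED_ACTIONS = {"pick", "place"}


@dataclass(frozen=True)
class RobotStep:
    action: str  # one of ALLOWED_ACTIONS
    target: str  # free-text object or location name, e.g. "red box"


def parse_plan(llm_reply: str) -> list[RobotStep]:
    """Turn a JSON reply into validated steps, rejecting anything unexpected."""
    raw_steps = json.loads(llm_reply)
    if not isinstance(raw_steps, list):
        raise ValueError("expected a JSON list of steps")
    steps = []
    for raw in raw_steps:
        if not isinstance(raw, dict):
            raise ValueError("each step must be a JSON object")
        action = raw.get("action")
        target = raw.get("target")
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"unsupported action: {action!r}")
        if not isinstance(target, str) or not target:
            raise ValueError("each step needs a non-empty target")
        steps.append(RobotStep(action, target))
    return steps


# Hand-written stand-in for what a model might return for:
# "Pick up the red box and place it on the shelf"
EXAMPLE_REPLY = (
    '[{"action": "pick", "target": "red box"},'
    ' {"action": "place", "target": "shelf"}]'
)

plan = parse_plan(EXAMPLE_REPLY)
for step in plan:
    print(step.action, "->", step.target)
```

The model only produces text here. Deciding how to actually move the arm stays with whatever controller receives the validated steps, which matches the interface-only role described above.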

How do LLMs enhance traditional AI in robotics, particularly in human-robot interaction?

Beyond enabling more intuitive communication through natural-language commands, the development of Vision-Language Models (VLMs) and Foundation Models by companies like Google builds on traditional AI by improving how robots understand and interact with their environment. LLMs are particularly effective for tasks that primarily involve language, such as generating code, creating instructions, or answering user queries in natural language. VLMs, on the other hand, excel at tasks that require integrating visual and linguistic information. For example, a VLM can help a robot identify an object based on a spoken command, like “Pick up the blue cup,” by combining visual processing (recognizing the blue cup) with language comprehension. This allows robots to interpret their surroundings and act more effectively in real-world settings.
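The "blue cup" example can be illustrated with a deliberately simplified Python sketch. It is not a VLM: a plain keyword match stands in for the step where language is tied to what the camera sees, and the `Detection` type, the `ground_phrase` function, and the scene contents are all hypothetical. A real system would get its detections from a perception model and would handle synonyms, ambiguity, and uncertainty.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Detection:
    label: str                            # object class, e.g. "cup"
    color: str                            # dominant color, e.g. "blue"
    position: tuple[float, float, float]  # assumed x, y, z in meters


def ground_phrase(phrase: str, detections: list[Detection]) -> Optional[Detection]:
    """Return the first detection whose label and color both appear in the phrase."""
    words = set(re.findall(r"[a-z]+", phrase.lower()))
    for det in detections:
        if det.label in words and det.color in words:
            return det
    return None


# Made-up scene; in practice this would come from a vision model.
scene = [
    Detection("box", "red", (0.40, 0.10, 0.02)),
    Detection("cup", "blue", (0.55, -0.20, 0.02)),
    Detection("box", "blue", (0.30, 0.25, 0.02)),
]

target = ground_phrase("Pick up the blue cup", scene)
print(target)
```

Requiring both the color and the label to match is what separates the blue cup from the blue box in this toy scene. If nothing matches, the function returns `None` so the caller can ask the user for clarification instead of guessing.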

What are the main challenges of using LLMs in robotics?

LLMs were designed to process text, but the robotics field needs them to handle physical and visual data too. Tasks like picking up objects or navigating require a deeper understanding of the environment, which LLMs alone can’t provide.

What other limitations need to be addressed?

LLMs require extensive computational power and large datasets, which makes them costly: they depend on high-performance hardware and cloud resources. And while LLMs don’t directly control robots, their outputs can influence actions, which raises safety concerns in unpredictable environments. Ensuring reliability requires rigorous testing, but no universal solution exists yet.

Looking ahead: the future of LLMs in robotics

The integration of Large Language Models (LLMs) into robotics will be gradual, because it depends on continued research and technological progress to overcome current challenges such as computational demands, safety concerns, and real-world adaptability. Vision-Language Models (VLMs) specialize in combining visual and language data, enabling robots to “see” and understand their surroundings, for instance by recognizing objects and acting on verbal commands like “Pick up the red book.” Foundation Models, on the other hand, aim to provide broader, multi-modal intelligence that integrates data from various sources (e.g., vision, language, and sensory input), allowing robots to perform more complex, context-aware tasks. These advances complement each other and together pave the way for robots that are both smarter and easier to use.

Explore more from Inbolt

Access similar articles, use cases, and resources to see how Inbolt drives intelligent automation worldwide.

The Circular Factory - How Physical AI Is Enabling Sustainable Manufacturing

From concept to reality, circular manufacturing should take center stage.

Across the manufacturing ...

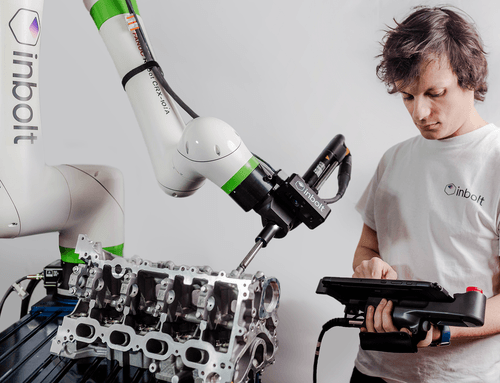

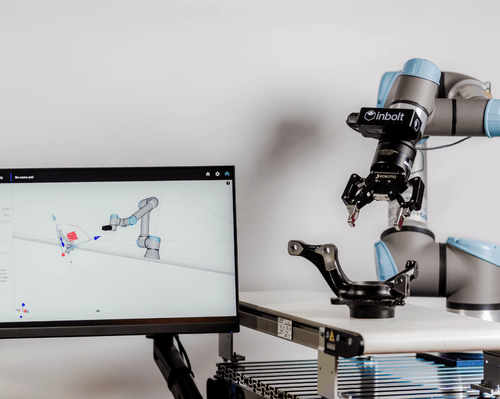

NVIDIA & UR join forces with Inbolt for intelligent automation

Bridging the gap between humans and robots: Inbolt, Universal Robots, and NVIDIA bring vision to fac...

KUKA robots just got eyes: Inbolt integration is here

For decades, KUKA robots have been a symbol of strength and precision on factory floors around the w...

Albane Dersy named one of “10 women shaping the future of robotics in 2025”

The International Federation of Robotics has recognized Albane Dersy, co-founder and COO of Inbo...

Want to Sound Smart About Vision‑Guidance for Robots?

Whether you’re new to the topic or looking to deepen your knowledge, here are 11 key terms...

Inbolt Joins NVIDIA Inception to Accelerate AI-Driven Automation

Inbolt joins the NVIDIA Inception program to boost its AI-driven automation solutions. This ...

Inbolt x UR AI Accelerator: Ushering in the Era of Vision-Guided Robotics by Default

Inbolt’s AI-powered software is now integrated into the NVIDIA-powered Universal Robots AI A...